Mathematical Operations with PyTorch

1. Defining tensors
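This section uses `zeros`, `ones` and `rand`. Tensors can also be built directly from Python lists with `torch.tensor`, and every constructor accepts an optional `dtype`. The values and names in this sketch are chosen only for illustration.

```python
import torch

# Build a tensor directly from nested Python lists
a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
print(a.shape)   # torch.Size([2, 2])
print(a.dtype)   # torch.float32 (the default for Python floats)

# Constructors such as zeros/ones/rand take a shape and an optional dtype
b = torch.zeros((3, 2), dtype=torch.int64)
print(b.dtype)   # torch.int64

# *_like variants reuse the shape and dtype of an existing tensor
c = torch.ones_like(a)
print(c)
```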

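```python
import torch

x = torch.zeros((3, 2))   # 3x2 tensor of zeros
y = torch.ones((3, 2))    # 3x2 tensor of ones
z = torch.rand((3, 2))    # 3x2 tensor of uniform random values in [0, 1)

print(x)
print(y)
print(z)
```

Output: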

```
tensor([[0., 0.],
        [0., 0.],
        [0., 0.]])
tensor([[1., 1.],
        [1., 1.],
        [1., 1.]])
tensor([[0.9255, 0.9734],
        [0.3468, 0.9825],
        [0.3859, 0.7481]])
```

2. Accessing tensors
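Indexing follows NumPy's conventions. The sketch below uses `torch.arange` instead of `torch.rand` (an illustrative choice) so that the values are predictable. It shows that a single element is still a 0-dimensional tensor, which is why the listing in this section prints `tensor(0.3341)` rather than a plain number; that `.item()` converts it to a Python number; that negative indices count from the end; and that basic slices are views that share memory with the original tensor.

```python
import torch

# Fixed values 0..14 arranged as 5 rows x 3 columns
a = torch.arange(15, dtype=torch.float32).reshape(5, 3)

elem = a[1, 2]            # same element as a[1][2]
print(elem.dim())         # 0: still a tensor, just with no dimensions
print(elem.item())        # 5.0 as a plain Python float

print(a[-1])              # last row: 12, 13, 14
print(a[:, -1])           # last column: 2, 5, 8, 11, 14

# Basic slicing returns a view, so writing through it changes `a`
first_two = a[:2]
first_two[0, 0] = 100.0
print(a[0, 0].item())     # 100.0
```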

```python
import torch

a = torch.rand((5, 3))

print(a)          # the whole tensor
print(a[0])       # row 1
print(a[0][2])    # row 1, column 3
print(a[1, 2])    # row 2, column 3
print(a[:2])      # first 2 rows
print(a[:, 1])    # column 2
```

Output:

```
tensor([[0.9835, 0.6304, 0.3341],
        [0.1006, 0.8246, 0.2030],
        [0.7149, 0.7477, 0.7167],
        [0.7997, 0.5218, 0.9917],
        [0.9539, 0.2919, 0.0582]])
tensor([0.9835, 0.6304, 0.3341])
tensor(0.3341)
tensor(0.2030)
tensor([[0.9835, 0.6304, 0.3341],
        [0.1006, 0.8246, 0.2030]])
tensor([0.6304, 0.8246, 0.7477, 0.5218, 0.2919])
```

3. Addition, subtraction, multiplication and division; matrix multiplication and transpose
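The listing in this section calls `x.t()`, which returns the transpose of a matrix, not its inverse. The all-2 matrix `x` used there has no inverse at all, because its determinant is 0. The sketch below contrasts the two on an invertible matrix chosen only for illustration, using `torch.linalg.inv` and `torch.linalg.det`, which are assumed to be available (they exist in recent PyTorch releases but not in very old ones).

```python
import torch

m = torch.tensor([[1.0, 2.0],
                  [3.0, 4.0]])     # invertible: det = 1*4 - 2*3 = -2

m_t = m.t()                        # transpose: swaps rows and columns
m_inv = torch.linalg.inv(m)        # true inverse, approximately [[-2, 1], [1.5, -0.5]]

print(m_t)
print(m_inv)

# A matrix times its inverse gives the identity, up to floating-point rounding
print(torch.allclose(m.mm(m_inv), torch.eye(2), atol=1e-5))   # True

# The all-2 matrix from the listing is singular, so it has no inverse
x = torch.ones((2, 2)) * 2
det_x = torch.linalg.det(x)
print(det_x)                       # 0 (possibly up to tiny rounding error)
```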

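```python
import torch

x = torch.ones((2, 2)) * 2   # 2x2 tensor filled with 2
y = torch.ones((2, 2))       # 2x2 tensor filled with 1

a = x.add(y)   # x + y
b = x.sub(y)   # x - y
c = x.mul(y)   # x * y, element-wise multiplication
d = x.div(y)   # x / y, element-wise division
e = x.mm(y)    # matrix multiplication
f = x.t()      # matrix transpose (not the inverse)

print(a)
print(b)
print(c)
print(d)
print(e)
print(f)
```

Output: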

```
tensor([[3., 3.],
        [3., 3.]])
tensor([[1., 1.],
        [1., 1.]])
tensor([[2., 2.],
        [2., 2.]])
tensor([[2., 2.],
        [2., 2.]])
tensor([[4., 4.],
        [4., 4.]])
tensor([[2., 2.],
        [2., 2.]])
```

{{info}}

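Note: these operations can also be written with the operators +, -, * and /. x.add, x.sub, x.mul and x.div return a new tensor and leave x unchanged, whereas the in-place versions x.add_, x.sub_, x.mul_ and x.div_ (with a trailing underscore) modify x.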

{{/info}}
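A quick check of the note, reusing the same `x` and `y` as the listing: the operators give the same results as the methods, and only the underscore (in-place) methods or augmented assignment such as `+=` change `x`.

```python
import torch

x = torch.ones((2, 2)) * 2
y = torch.ones((2, 2))

# Operators are shorthand for the methods used in the listing
assert torch.equal(x + y, x.add(y))
assert torch.equal(x - y, x.sub(y))
assert torch.equal(x * y, x.mul(y))
assert torch.equal(x / y, x.div(y))
assert torch.equal(x @ y, x.mm(y))   # @ is matrix multiplication for 2-D tensors

# Out-of-place: the result is a new tensor, x keeps its values
_ = x.add(y)
print(x)        # still all 2s

# In-place (trailing underscore): x is overwritten
x.add_(y)
print(x)        # now all 3s

# Augmented assignment is also in-place
x += y
print(x)        # now all 4s
```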

4. Converting between ndarray and tensor

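```python
import torch
import numpy as np

x_tensor = torch.randn(2, 3)            # standard-normal tensor (float32)
y_numpy = np.random.randn(2, 3)         # standard-normal ndarray (float64)

x_numpy = x_tensor.numpy()              # tensor -> ndarray, shares memory with x_tensor
y_tensor = torch.from_numpy(y_numpy)    # ndarray -> tensor, keeps float64 and shares memory
z_tensor = torch.FloatTensor(y_numpy)   # ndarray -> float32 tensor (a converted copy)

print(x_numpy)
print(y_tensor)
print(z_tensor)
```

Output: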

```
[[-1.2588831 0.57915723 -1.0662982 ]
 [-0.0852809 0.13274558 0.19468519]]
tensor([[ 1.4440, 2.3114, 0.6984],
        [ 0.3031, -0.2362, 0.9197]], dtype=torch.float64)
tensor([[ 1.4440, 2.3114, 0.6984],
        [ 0.3031, -0.2362, 0.9197]])
```

{{info}}

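Note: rand creates a tensor of random numbers drawn uniformly from [0, 1), while randn draws them from the standard normal distribution (mean 0, variance 1).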

{{/info}}
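Two practical consequences of the conversions above, shown in a small sketch. First, `Tensor.numpy()` and `torch.from_numpy()` share memory with their source, so a change on one side shows up on the other; `torch.tensor(...)` is used here (instead of the legacy `torch.FloatTensor` from the listing) as an assumed modern way to get an independent copy. Second, the sampling ranges of `rand` and `randn` can be checked empirically. Note that `.numpy()` only works on CPU tensors that do not require gradients.

```python
import numpy as np
import torch

# Tensor.numpy() shares memory with the (CPU) tensor
t = torch.zeros(3)
arr = t.numpy()
t[0] = 7.0
print(arr[0])                      # 7.0: the ndarray sees the change

y_numpy = np.random.randn(2, 3)    # float64 array
shared = torch.from_numpy(y_numpy)                    # keeps float64, shares memory
copied = torch.tensor(y_numpy, dtype=torch.float32)   # independent float32 copy

y_numpy[0, 0] = 42.0
print(shared[0, 0].item())         # 42.0: visible through `shared`
print(copied[0, 0].item())         # original value: `copied` is unaffected
print(shared.dtype, copied.dtype)  # torch.float64 torch.float32

# rand: uniform on [0, 1); randn: standard normal N(0, 1)
u = torch.rand(10000)
n = torch.randn(10000)
print(u.min().item(), u.max().item())    # both within [0, 1)
print(n.mean().item(), n.std().item())   # close to 0 and 1
```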

5. Tensor operations on the GPU
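Calling `.cuda()` fails on a machine without a CUDA GPU. A common device-agnostic pattern is to choose a `torch.device` once and move tensors with `.to(device)`; the sketch below runs on either the CPU or the GPU, and the variable names are illustrative.

```python
import torch

# Pick the GPU when one is present, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

x = torch.rand(2, 3, device=device)    # created directly on `device`
y = torch.randn(2, 3).to(device)       # created on the CPU, then moved

c = x + y                  # both operands must live on the same device
print(c.device)

c_cpu = c.cpu()            # bring the result back, e.g. before calling .numpy()
print(c_cpu.device)        # cpu
```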

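```python
import torch

x = torch.rand(2, 3)
y = torch.randn(2, 3)

cuda = torch.cuda.is_available()
print("cuda available: %s" % cuda)

if cuda:               # .cuda() raises an error when no CUDA device exists
    a = x.cuda()       # copy the tensors to the GPU
    b = y.cuda()
    c = a + b          # computed on the GPU
    print(c)

    a = a.cpu()        # copy the tensors back to the CPU
    b = b.cpu()
    c = a + b          # computed on the CPU
    print(c)
```

Output (on a machine with a CUDA GPU):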

```
cuda available: True
tensor([[ 0.5795, 0.7328, 1.2017],
        [ 1.3393, 0.9051, -0.1900]], device='cuda:0')
tensor([[ 0.5795, 0.7328, 1.2017],
        [ 1.3393, 0.9051, -0.1900]])
```

6. Dynamic computation graph

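```python
import torch

x = torch.ones(2, 2, requires_grad=True)   # leaf tensor that tracks gradients
print(x.data)

y = x + 2
print(y.data)
print(y.grad_fn)       # the operation that produced y

z = y * y
print(z.data)
print(z.grad_fn)

t = torch.mean(z)      # sum of all elements / number of elements
print(t.data)

t.backward()           # back-propagate from the scalar t
print(z.grad)          # None: z is not a leaf tensor
print(y.grad)          # None: y is not a leaf tensor
print(x.grad)          # dt/dx
```

Output: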

tensor([[1., 1.],

[1., 1.]])

tensor([[3., 3.],

[3., 3.]])

<AddBackward0 object at 0x0000027485E81DC0>

tensor([[9., 9.],

[9., 9.]])

<MulBackward0 object at 0x0000027485E81DC0>

tensor(9.)

d:\Project\LearnPyTorch\sample01\02.py:18: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won't be populated during autograd.backward(). If you indeed want the .grad field to be populated for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations. (Triggered internally at C:\actions-runner\_work\pytorch\pytorch\builder\windows\pytorch\build\aten\src\ATen/core/TensorBody.h:485.)

print(z.grad)

None

d:\Project\LearnPyTorch\sample01\02.py:19: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won't be populated during autograd.backward(). If you indeed want the .grad field to be populated for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations. (Triggered internally at C:\actions-runner\_work\pytorch\pytorch\builder\windows\pytorch\build\aten\src\ATen/core/TensorBody.h:485.)

print(y.grad)

None

tensor([[1.5000, 1.5000],

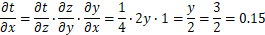

[1.5000, 1.5000]])计算过程
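The same calculation written out with the chain rule, where $x_i$, $y_i$, $z_i$ ($i = 1,\dots,4$) denote the four entries of `x`, `y` and `z`, and every $x_i = 1$:

$$
y_i = x_i + 2,\qquad z_i = y_i^2,\qquad t = \frac{1}{4}\sum_{i=1}^{4} z_i
$$

$$
\frac{\partial t}{\partial x_i}
= \frac{\partial t}{\partial z_i}\cdot\frac{\partial z_i}{\partial y_i}\cdot\frac{\partial y_i}{\partial x_i}
= \frac{1}{4}\cdot 2y_i\cdot 1
= \frac{y_i}{2}
= \frac{1 + 2}{2}
= 1.5
$$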

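So every element of the gradient of x is 1.5. y and z are not leaf nodes, so autograd does not populate their .grad attributes, which is why they print None and trigger the warnings.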
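As the warning suggests, calling `.retain_grad()` on a non-leaf tensor before `backward()` makes autograd keep its gradient. A minimal sketch repeating the example with that change:

```python
import torch

x = torch.ones(2, 2, requires_grad=True)   # leaf tensor
y = x + 2
z = y * y
y.retain_grad()      # keep gradients of these non-leaf tensors
z.retain_grad()

t = z.mean()
t.backward()

print(x.is_leaf, y.is_leaf)   # True False
print(z.grad)    # dt/dz = 1/4 = 0.25 everywhere
print(y.grad)    # dt/dy = 0.25 * 2y = 1.5 everywhere
print(x.grad)    # dt/dx = dt/dy * 1 = 1.5 everywhere
```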

Last updated 10 months ago

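This article was written by a human and polished with AI. If you repost it, please credit the original: Mathematical Operations with PyTorch (使用PyTorch进行数学运算)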